TVQA Dataset - Download and Description

1. Annotations (questions, answers, etc)

Download link: tvqa_qa_release.tar.gz [15MB]

md5sum: 7f751d611848d0756ee4b760446ef7cf
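To check a download against the md5sum listed above, a minimal sketch using only the Python standard library could look like this. The helper names `md5_of_file` and `verify_download` are illustrative, not part of any TVQA tooling, and the local file path is an assumption.

```python
import hashlib

# MD5 value listed above for tvqa_qa_release.tar.gz
EXPECTED_MD5 = "7f751d611848d0756ee4b760446ef7cf"


def md5_of_file(path, chunk_size=1 << 20):
    """Return the hex MD5 digest of a file, reading it in 1 MiB chunks."""
    digest = hashlib.md5()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_download(path, expected=EXPECTED_MD5):
    """Return True when the file's MD5 digest matches the expected value."""
    return md5_of_file(path) == expected
```

For example, `verify_download("tvqa_qa_release.tar.gz")` should return `True` for an intact download saved under that name in the current directory.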

tvqa_

| File | #QAs | Usage |
|---|---|---|
| tvqa_ | 122,039 | Model training |
| tvqa_ | 15,253 | Hyperparameter tuning |
| tvqa_ | 7,623 | Model testing. Labels are not released for this set, please upload your predictions to the server for testing. |

Note that the original test set described in the TVQA paper is split into two subsets: test-public (7,623 QAs) and test-challenge (7,630 QAs). The test-public set is used for paper publication and is shown on the leaderboard; the test-challenge set is reserved for future use.

Each line of these files can be loaded as a JSON object, containing the following entries:

| Key | Type | Description |
|---|---|---|
| qid | int | question id |
| q | str | question |
| a0, ..., a4 | str | multiple choice answers |
| answer_idx | int | answer index; this entry does not exist for test_public |
| ts | str | timestamp annotation, e.g. '76.01-84.2' denotes a localized span that starts at 76.01 seconds and ends at 84.2 seconds |
| vid_name | str | name of the video clip that accompanies the question. The videos are named following the format '{show_ |
| show_name | str | name of the TV show |
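Since each line of the annotation files is a standalone JSON object, the files can be read line by line. The sketch below is one way to do that with the standard library; the function names and the key check are illustrative assumptions, not part of the official release.

```python
import json

# Keys listed in the table above; answer_idx is optional (absent for test_public).
REQUIRED_KEYS = {"qid", "q", "a0", "a1", "a2", "a3", "a4",
                 "ts", "vid_name", "show_name"}


def load_qa_lines(lines):
    """Parse an iterable of JSON lines into a list of dicts, skipping blank lines."""
    records = []
    for line in lines:
        line = line.strip()
        if not line:
            continue
        record = json.loads(line)
        missing = REQUIRED_KEYS - record.keys()
        if missing:
            raise ValueError(f"qid {record.get('qid')}: missing keys {sorted(missing)}")
        records.append(record)
    return records


def load_qa_file(path):
    """Load one annotation file (one JSON object per line)."""
    with open(path, encoding="utf-8") as f:
        return load_qa_lines(f)


def answer_text(record):
    """Return the correct answer string, or None when answer_idx is absent."""
    idx = record.get("answer_idx")
    return None if idx is None else record[f"a{idx}"]
```

Returning `None` from `answer_text` keeps the same code path usable for the test_public file, where labels are withheld.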

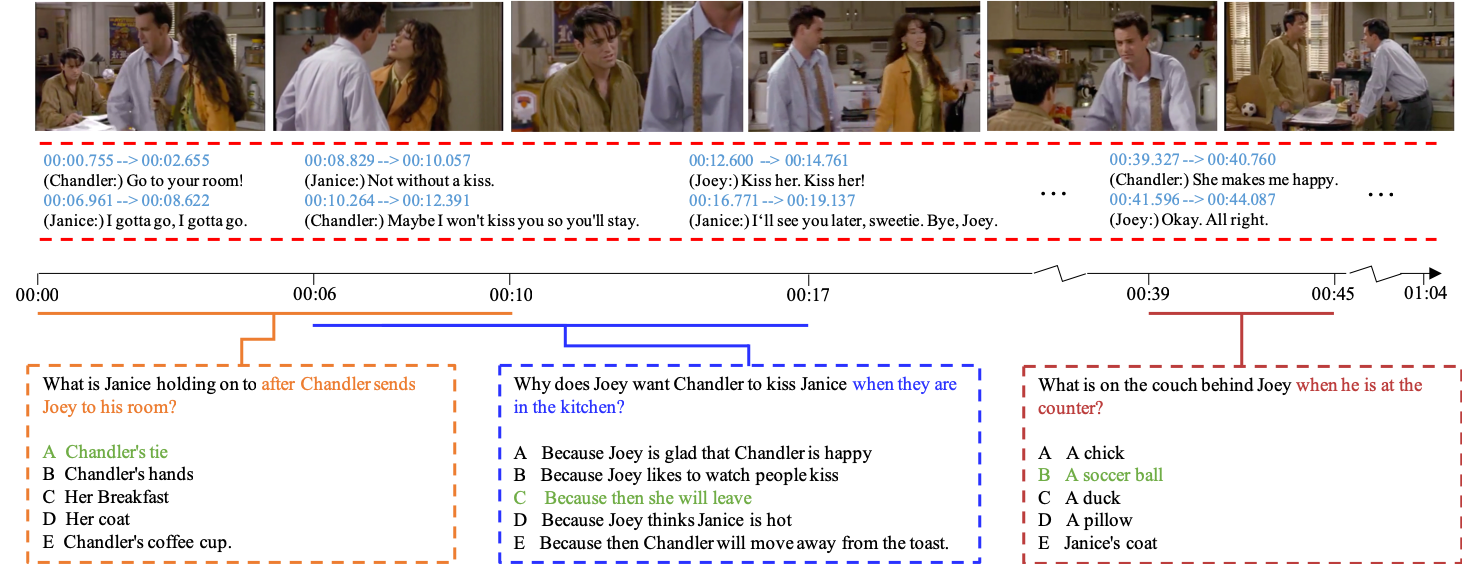

A sample of the QA is shown below:

{
    "a0": "A martini glass",
    "a1": "Nachos",
    "a2": "Her purse",
    "a3": "Marshall's book",
    "a4": "A beer bottle",
    "answer_idx": 4,
    "q": "What is Robin holding in her hand when she is talking to Ted about Zoey?",
    "qid": 7,
    "ts": "1.21-8.49",
    "vid_name": "met_s06e22_seg01_clip_02",
    "show_name":
}
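The `ts` field packs the start and end of the localized span into a single string. A minimal sketch for splitting it into numeric seconds, assuming the 'start-end' form shown in the sample (the function name `parse_ts` is hypothetical):

```python
def parse_ts(ts):
    """Split a 'start-end' timestamp string into (start, end) floats in seconds."""
    start_str, end_str = ts.split("-")
    return float(start_str), float(end_str)


# Timestamp from the sample QA above.
start, end = parse_ts("1.21-8.49")
print(start, end)  # 1.21 8.49
```

Entries that deviate from this form would raise `ValueError` here, which makes malformed annotations easy to spot.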

2. Subtitles

Download link: tvqa_subtitles.tar.gz [14MB]

md5sum: 70094363db36357f4ad4f52ae68a0af8

tvqa_

...

9
00:00:19,275 --> 00:00:20,775
(Ted:)Dude, that's my girlfriend.

10
00:00:20,776 --> 00:00:25,146
(Barney:)Point is, we are taking her and The Arcadian down.

11
00:00:25,147 --> 00:00:26,548
(Barney:)Am I right, Teddy Westside?

12
00:00:26,549 --> 00:00:28,300
(Ted:)You know it. Ha-ha!

13
00:00:28,301 --> 00:00:30,118
(Lily:)Okay. See, that's so weird to me.

...
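The excerpt above follows the SubRip (SRT) layout, with the speaker given in a `(Name:)` prefix. The sketch below parses such text into cues with start and end times in seconds. It assumes cues are separated by blank lines, as in standard SRT files; the `Cue` type and function names are illustrative.

```python
import re
from typing import NamedTuple, Optional


class Cue(NamedTuple):
    index: int
    start: float            # seconds
    end: float              # seconds
    speaker: Optional[str]  # None when no "(Name:)" prefix is present
    text: str


TIME_RE = re.compile(r"(\d+):(\d+):(\d+),(\d+)")
SPEAKER_RE = re.compile(r"^\((?P<speaker>[^()]+):\)\s*(?P<text>.*)$", re.S)


def to_seconds(stamp):
    """Convert 'HH:MM:SS,mmm' to seconds."""
    h, m, s, ms = map(int, TIME_RE.fullmatch(stamp.strip()).groups())
    return h * 3600 + m * 60 + s + ms / 1000


def parse_srt(content):
    """Parse SRT text into a list of Cue tuples, skipping malformed blocks."""
    cues = []
    for block in re.split(r"\n\s*\n", content.strip()):
        lines = block.strip().splitlines()
        if len(lines) < 3 or not lines[0].strip().isdigit() or "-->" not in lines[1]:
            continue
        start, end = (to_seconds(part) for part in lines[1].split("-->"))
        text = " ".join(lines[2:]).strip()
        match = SPEAKER_RE.match(text)
        speaker = match.group("speaker") if match else None
        if match:
            text = match.group("text")
        cues.append(Cue(int(lines[0]), start, end, speaker, text))
    return cues
```

Keeping times as float seconds makes it straightforward to compare cues against the `ts` spans in the QA annotations.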

3. Video features

3.1 ImageNet feature, download link: tvqa_

tvqa_ file contains an HDF5 file.

The 2048-D features are extracted from the pool5 layer of an ImageNet-pretrained ResNet-101 model.

For each clip, we use at most 300 frames. If a clip has more frames than that, downsampling is applied:

downsample_
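The snippet above is truncated in this copy of the page, so the exact rule used by the authors is not recoverable from it. As an illustration only, one common way to cap a clip at 300 frames is to pick evenly spaced frame indices. The function name, the 0-based indexing, and the sampling scheme below are all assumptions.

```python
def downsample_indices(num_frames, max_frames=300):
    """Pick at most max_frames evenly spaced 0-based indices from range(num_frames).

    Assumed scheme for illustration; not necessarily the rule used for TVQA.
    """
    if num_frames <= max_frames:
        return list(range(num_frames))
    step = num_frames / max_frames  # > 1, so the chosen indices never repeat
    return [int(i * step) for i in range(max_frames)]


indices = downsample_indices(600)
print(len(indices), indices[:3], indices[-1])  # 300 [0, 2, 4] 598
```

Clips with 300 frames or fewer are returned unchanged, matching the "at most 300 frames" statement.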

To download the files stored in Google Drive, we recommend using a command-line tool such as gdrive.

3.2 Visual concepts feature, download link:

det_

det_ file contains a Python dict with 'vid_name' as keys. Each value is a list of sentences, and each sentence contains the detected object and attribute labels of a single frame, produced by a modified Faster R-CNN trained on Visual Genome. Note that this feature is downsampled in the same way as the ImageNet feature.

3.3 Regional visual feature: please follow the instructions here to do the extraction. Currently, we do not plan to release it, due to its size.

4. Video frames

Download link: tvqa_video_frames_fps3_hq.tar.gz [43GB], please fill out this form first

The video frames are extracted at 3 frames per second (FPS); we show a sample of them below. To obtain the frames, please fill out the form first. You will be required to provide information about yourself and your advisor, and to sign our agreement. If your form is valid, the download link for the video frames will be sent to you in about a week. Please do not share the video frames with others.
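Because the frames are sampled at 3 FPS, a timestamp span from the annotations can be mapped to a range of frame indices. The sketch below assumes 0-based indices with frame `i` taken at `i / 3` seconds; the actual file naming and indexing of the released frames may differ, so treat the offsets as an assumption to verify against the data.

```python
import math

FPS = 3  # extraction rate stated above


def frames_in_span(start_sec, end_sec, fps=FPS):
    """Return the 0-based frame indices whose sample time lies within [start_sec, end_sec]."""
    first = math.ceil(start_sec * fps)
    last = math.floor(end_sec * fps)
    return list(range(first, last + 1))


# Span from the 'ts' example '76.01-84.2'.
frames = frames_in_span(76.01, 84.2)
print(frames[0], frames[-1], len(frames))  # 229 252 24
```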